I recently implemented an LRU cache in a personal project I'm working on, and I've been impressed by the benefits it brings to the performance and scalability of the system.

An LRU cache (Least Recently Used cache) is a data structure that stores a limited number of key-value pairs and automatically removes the least recently used item when the cache reaches its capacity. The LRU policy helps to optimize the use of memory and reduce the number of expensive computations or data retrievals.

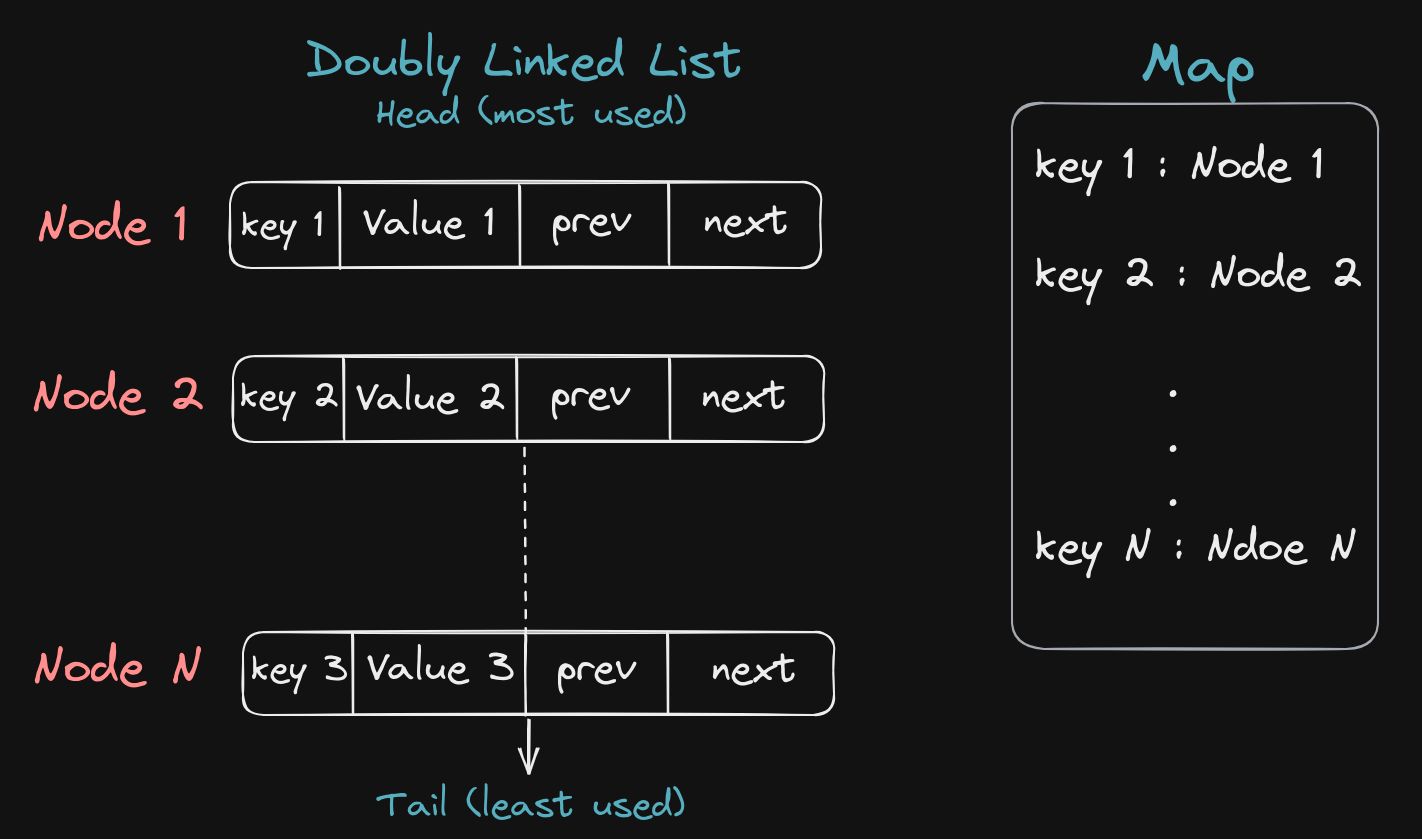

In my implementation, I used a combination of a linked list and a map to achieve constant-time O(1) operations for getting and putting items in the cache. The linked list keeps track of the order of access to the items, with the head being the most recently used item and the tail being the least recently used item.

class ListNode<K, V> {

key: K;

value: V;

prev: ListNode<K, V> | null;

next: ListNode<K, V> | null;

constructor(key: K, value: V) {

this.key = key;

this.value = value;

this.prev = null;

this.next = null;

}

}

class LRUCache<K, V> {

capacity: number;

map: Map<K, ListNode<K, V>>;

head: ListNode<K, V> | null;

tail: ListNode<K, V> | null;

constructor(capacity: number) {

this.capacity = capacity;

this.map = new Map();

this.head = null;

this.tail = null;

}

}

Whenever an item is accessed or added to the cache, it is moved to the head of the linked list, marking it as the most recently used item. If the cache reaches its capacity, the tail of the linked list is removed, effectively evicting the least recently used item from the cache!

remove(node: ListNode<K, V>) {

if (node.prev !== null) {

node.prev.next = node.next;

} else {

this.head = node.next;

}

if (node.next !== null) {

node.next.prev = node.prev;

} else {

this.tail = node.prev;

}

}

addToHead(node: ListNode<K, V>) {

node.next = this.head;

node.prev = null;

if (this.head !== null) {

this.head.prev = node;

}

this.head = node;

if (this.tail === null) {

this.tail = node;

}

}

get(key: K): V | -1 {

if (this.map.has(key)) {

const node = this.map.get(key)!;

this.remove(node);

this.addToHead(node);

return node.value;

}

return -1;

}

put(key: K, value: V): void {

if (this.map.has(key)) {

const node = this.map.get(key)!;

node.value = value;

this.remove(node);

this.addToHead(node);

} else {

const node = new ListNode(key, value);

this.map.set(key, node);

this.addToHead(node);

if (this.map.size > this.capacity) {

const nodeToRemove = this.tail!;

this.remove(nodeToRemove);

this.map.delete(nodeToRemove.key);

}

}

Using an LRU cache has improved the performance of my system by reducing the time and resources required to retrieve or compute data that has already been accessed. It has also helped to prevent the system from running out of memory or becoming unresponsive due to excessive data storage or processing.

If you're working on a similar project or looking for ways to optimize the performance and scalability of your system, I highly recommend considering the use of an LRU cache.

Check out the repo here. Leetcode question