What are we building

The primary goal of this simple application is to:

• Check the status of several URLs concurrently.

• Retry failed requests up to a specified number of times.

• Log the status of each URL to a log file and the console.

• Gracefully handle shutdown signals.

Global Variables

var (
	maxConcurrency = 5
	maxRetries     = 3
	retryInterval  = 2 * time.Second
	logFileName    = "status_checker.log"
)

maxConcurrency is the maximum number of concurrent HTTP requests.

maxRetries is the maximum number of retries for failed requests.

retryInterval is the wait time between retries.

logFileName is the name of the log file.

Log File Setup

I wanted to not only log the output to a file but also print everything to the terminal. To do this, I had to set up a logger for both stdout and the log file.

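First, I remove the log file if it already exists, so every run starts with a fresh log (os.Create would also truncate an existing file, so this step is optional). Then, I create the file and use defer to close it when the surrounding function returns. Finally, I point the standard logger at an io.MultiWriter that duplicates every write to both stdout and the log file.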

if err := os.Remove(logFileName); err != nil && !os.IsNotExist(err) {
	log.Fatalf("Failed to remove log file: %v", err)
}

logFile, err := os.Create(logFileName)
if err != nil {
	log.Fatalf("Failed to create log file: %v", err)
}
defer logFile.Close()

multi := io.MultiWriter(os.Stdout, logFile)
log.SetOutput(multi)

Setting up the context

Here we create a context that is cancelled when the program receives SIGINT (Ctrl + C) or SIGTERM (termination signal). signal.NotifyContext registers for those signals under the hood and marks the returned context as done when one arrives. It does not log anything itself, so any shutdown message has to come from code that observes ctx.Done(). Essentially, we use the context to shut the application down gracefully and to avoid wasted work: every goroutine that watches ctx.Done() can stop as soon as we press Ctrl + C. Note that an in-flight HTTP request is aborted only if it was created with this context (for example via http.NewRequestWithContext); a plain http.Get does not observe it. The deferred cancel call unregisters the signal handling when the surrounding function returns.

ctx, cancel := signal.NotifyContext(context.Background(), syscall.SIGINT, syscall.SIGTERM)
defer cancel()

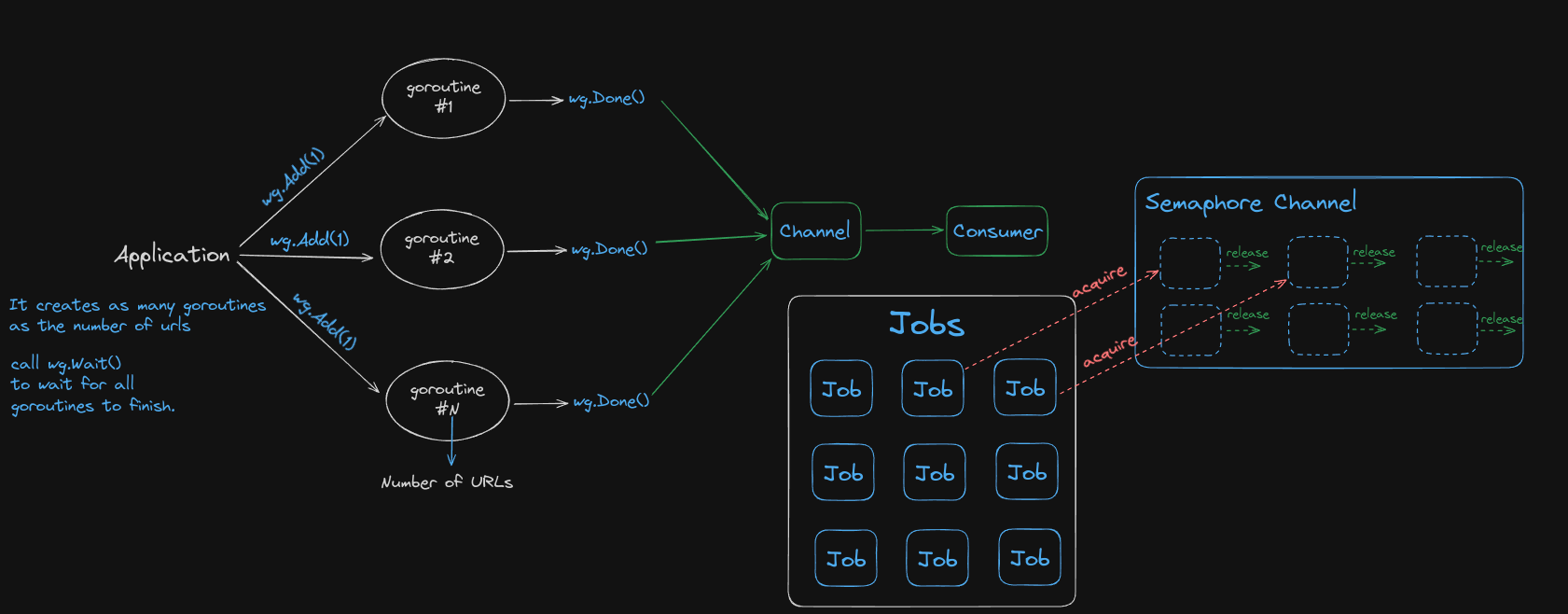
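For cancellation to reach a request that is already in flight, the request has to carry the context. The sketch below is a minimal illustration of that idea, not the application's code: statusOf and cancelledMidRequest are hypothetical helper names, a local httptest server stands in for a slow website, and context.WithCancel stands in for signal.NotifyContext so that the example can cancel itself without a real signal.

```go
package main

import (
	"context"
	"errors"
	"fmt"
	"net/http"
	"net/http/httptest"
	"time"
)

// statusOf is a hypothetical helper: it issues a GET request bound to ctx
// and returns the response status code.
func statusOf(ctx context.Context, url string) (int, error) {
	req, err := http.NewRequestWithContext(ctx, http.MethodGet, url, nil)
	if err != nil {
		return 0, err
	}
	resp, err := http.DefaultClient.Do(req)
	if err != nil {
		return 0, err
	}
	defer resp.Body.Close()
	return resp.StatusCode, nil
}

// cancelledMidRequest starts a deliberately slow local test server, cancels
// the context shortly after the request is sent, and reports whether the
// request failed with context.Canceled.
func cancelledMidRequest() bool {
	release := make(chan struct{})
	srv := httptest.NewServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		// Hold the request open until the client goes away or the demo ends.
		select {
		case <-release:
		case <-r.Context().Done():
		}
	}))
	defer srv.Close()
	defer close(release) // runs before srv.Close, so the handler can return

	// Stand-in for signal.NotifyContext: cancel after a short delay.
	ctx, cancel := context.WithCancel(context.Background())
	time.AfterFunc(50*time.Millisecond, cancel)

	_, err := statusOf(ctx, srv.URL)
	return errors.Is(err, context.Canceled)
}

func main() {
	fmt.Println("cancelled mid-request:", cancelledMidRequest())
}
```

In the real program the context returned by signal.NotifyContext would be passed to such a helper, so pressing Ctrl + C would interrupt a slow request instead of waiting for it to finish.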

Concurrency Control

In this code snippet, we create the c channel, which a finished check uses to hand its link back to another goroutine (explained later). We also create a WaitGroup to track the completion of every check that is started for each website.

Finally, we create a semaphore channel sem with a capacity of maxConcurrency, so that at most 5 checks do their work at the same time. All goroutines are still started; the semaphore only limits how many of them get past the acquire step.

c := make(chan string)
var wg sync.WaitGroup
sem := make(chan struct{}, maxConcurrency)

In the following code, for each link we start a new goroutine that launches a concurrent check for the link with a retry count of 0 initially.

for _, link := range links {
	wg.Add(1)
	go checkLink(ctx, c, sem, &wg, link, 0)
}
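To see the launch-and-wait pattern in isolation, here is a small sketch under a few assumptions: checkAll is a hypothetical helper that is not part of the application, the check function is a stand-in for a real HTTP check, and the URLs are placeholders. It starts one goroutine per link, as the loop above does, and uses the WaitGroup to wait until all of them have finished.

```go
package main

import (
	"fmt"
	"strings"
	"sync"
)

// checkAll runs check for every link in its own goroutine and waits for all
// of them to finish before returning the collected results.
func checkAll(links []string, check func(string) bool) map[string]bool {
	var (
		wg      sync.WaitGroup
		mu      sync.Mutex // guards results, which several goroutines write to
		results = make(map[string]bool, len(links))
	)
	for _, link := range links {
		wg.Add(1) // register the goroutine before starting it
		go func(link string) {
			defer wg.Done()
			ok := check(link)
			mu.Lock()
			results[link] = ok
			mu.Unlock()
		}(link)
	}
	wg.Wait() // blocks until every goroutine has called Done
	return results
}

func main() {
	// Placeholder URLs; the prefix test is a stand-in for a real check.
	links := []string{"https://example.com", "http://example.org"}
	isHTTPS := func(link string) bool { return strings.HasPrefix(link, "https://") }
	fmt.Println(checkAll(links, isHTTPS))
}
```

Calling wg.Add(1) before the go statement, rather than inside the goroutine, ensures the counter is already raised by the time wg.Wait() runs.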

Pinging

This is our pinging mechanism. We start another goroutine that continuously listens on the c channel. When it receives a link on that channel (meaning a check for that link has finished), it increments the wait group and launches a new check for the same link after 3 seconds (pinging). The goroutine exits once the context is cancelled.

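go func() {
	for {
		select {
		case <-ctx.Done():
			return
		case link := <-c:
			wg.Add(1)
			go func(link string) {
				// Wait 3 seconds before re-checking, but stop waiting at once on shutdown.
				select {
				case <-time.After(3 * time.Second):
				case <-ctx.Done():
				}
				// checkLink notices a cancelled context itself and still calls wg.Done.
				checkLink(ctx, c, sem, &wg, link, 0)
			}(link)
		}
	}
}()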

checkLink Functionality

This function is responsible for checking the status of a link. Let's start off with the arguments:

• ctx: context

• c: communication channel

• sem: semaphore channel

• wg: wait group

• link: link URL

• retryCount: current retry count

First of all, it defers wg.Done(), so the wait group is decremented when the function finishes.

What is really interesting here, though, is the way the semaphore channel works:

Earlier we created a semaphore channel with a buffer size of maxConcurrency (5). The buffer size determines how many tokens (or slots) are available, which in this case, controls how many HTTP link checks can occur concurrently.

sem := make(chan struct{}, maxConcurrency)

We then start the checkLink function by immediately sending an empty struct into the sem channel. The empty struct is chosen because it occupies zero bytes, making it an efficient choice for signaling without carrying data.

By sending to the channel, a goroutine effectively acquires a slot in the semaphore. If fewer than maxConcurrency goroutines currently hold a slot, the send operation does not block, because there is space in the channel's buffer.

If maxConcurrency goroutines have already sent to the channel (that is, all slots are taken), the send blocks. The goroutine waits here until another goroutine receives from the channel (releases a slot) and makes space in the buffer.

In other words, sending (writing) to a full buffered channel or receiving (reading) from an empty one blocks, so the goroutine waits.

sem <- struct{}{}

The release appears right after the slot is acquired, in the line below. The defer keyword ensures that it executes when the surrounding function (checkLink) exits, regardless of how it exits (a normal return, an early return, or a panic). Receiving from the channel removes one token and frees a slot for another goroutine to use.

defer func() { <-sem }()
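The acquire and release steps can be tried out on their own. The following sketch is an illustration under assumptions rather than part of the application: peakConcurrency is a hypothetical helper, and a short sleep stands in for the HTTP check. It runs a batch of jobs behind a buffered-channel semaphore and records the highest number of jobs that were running at the same time, which should never exceed the limit.

```go
package main

import (
	"fmt"
	"sync"
	"time"
)

// peakConcurrency runs `tasks` short jobs behind a semaphore with `limit`
// slots and returns the highest number of jobs observed running at once.
func peakConcurrency(tasks, limit int) int {
	var (
		wg      sync.WaitGroup
		mu      sync.Mutex // guards running and peak
		running int
		peak    int
	)
	sem := make(chan struct{}, limit)

	for i := 0; i < tasks; i++ {
		wg.Add(1)
		go func() {
			defer wg.Done()

			sem <- struct{}{}        // acquire: blocks while all slots are taken
			defer func() { <-sem }() // release: runs when this function returns

			mu.Lock()
			running++
			if running > peak {
				peak = running
			}
			mu.Unlock()

			time.Sleep(10 * time.Millisecond) // stand-in for the HTTP check

			mu.Lock()
			running--
			mu.Unlock()
		}()
	}

	wg.Wait()
	return peak
}

func main() {
	fmt.Println("peak concurrency:", peakConcurrency(20, 3))
}
```

Because the counter is raised only after a slot is acquired and lowered before the deferred release runs, the recorded peak is bounded by the channel's capacity.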

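The function then runs its attempts in a loop. Before each attempt it selects on the context channel (ctx.Done()); if the context has been cancelled, it logs a message and exits.

Each attempt performs an HTTP GET request on the provided link and stores the response (resp) and any error (err). The request is built with http.NewRequestWithContext, so cancelling the context also aborts a request that is already in flight. If there's an error, the function logs it and, if retries are not exhausted (retryCount < maxRetries), logs a retry message, waits for the retry interval, and tries again with an incremented retry count. If retries are exhausted, it logs a message indicating that it is giving up on the link. Note that any HTTP response counts as success here, so a 404 or 500 is also reported as "up" together with its status code.

Retrying in a loop rather than through a recursive call matters for two reasons. A recursive call would run a second deferred wg.Done() without a matching wg.Add(1), and it would try to acquire a second semaphore slot while still holding the first, which can deadlock once enough links are failing at the same time.

In the end, it sends the link back to the c channel exactly once, to trigger the pinging mechanism we mentioned above. That send sits in a select together with ctx.Done(), so it cannot block forever after the pinging goroutine has stopped listening.

func checkLink(ctx context.Context, c chan string, sem chan struct{}, wg *sync.WaitGroup, link string, retryCount int) {
	defer wg.Done()

	sem <- struct{}{}        // acquire a slot
	defer func() { <-sem }() // release it when the function returns

	for ; ; retryCount++ {
		select {
		case <-ctx.Done():
			log.Printf("Context cancelled, stopping check for %s", link)
			return
		default:
		}

		// Bind the request to ctx so that cancellation aborts it in flight.
		var resp *http.Response
		req, err := http.NewRequestWithContext(ctx, http.MethodGet, link, nil)
		if err == nil {
			resp, err = http.DefaultClient.Do(req)
		}
		if err == nil {
			resp.Body.Close()
			log.Printf("%s is up, status code: %d", link, resp.StatusCode)
			break
		}
		if ctx.Err() != nil {
			continue // cancelled mid-request; the check at the top of the loop exits
		}

		log.Printf("Error checking link %s: %s", link, err)
		if retryCount >= maxRetries {
			log.Printf("Max retries reached for %s, giving up.", link)
			break
		}
		log.Printf("Retrying %s in %v (retry %d/%d)", link, retryInterval, retryCount+1, maxRetries)

		// Wait for the retry interval, or wake up early on shutdown.
		select {
		case <-time.After(retryInterval):
		case <-ctx.Done():
		}
	}

	// Hand the link back for the next ping unless we are shutting down.
	select {
	case c <- link:
	case <-ctx.Done():
	}
}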
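The retry-with-delay behaviour can also be written as a standalone loop that stops waiting as soon as the context is cancelled. This is a hedged sketch, not the application's code: retry and flakyDemo are hypothetical names, and the function that fails twice before succeeding stands in for an unreliable HTTP check.

```go
package main

import (
	"context"
	"errors"
	"fmt"
	"time"
)

// retry calls fn until it succeeds, the retries are used up, or ctx is
// cancelled. It returns the number of calls made and the last error.
func retry(ctx context.Context, maxRetries int, interval time.Duration, fn func() error) (int, error) {
	var err error
	for attempt := 0; ; attempt++ {
		// Do not start a new attempt after cancellation.
		if err = ctx.Err(); err != nil {
			return attempt, err
		}
		if err = fn(); err == nil {
			return attempt + 1, nil
		}
		if attempt >= maxRetries {
			return attempt + 1, err
		}
		// Wait between attempts, but return at once on cancellation.
		select {
		case <-time.After(interval):
		case <-ctx.Done():
			return attempt + 1, ctx.Err()
		}
	}
}

// flakyDemo runs retry against a function that fails twice and then succeeds.
func flakyDemo() (int, error) {
	calls := 0
	return retry(context.Background(), 3, time.Millisecond, func() error {
		calls++
		if calls < 3 {
			return errors.New("temporary failure")
		}
		return nil
	})
}

func main() {
	calls, err := flakyDemo()
	fmt.Println("calls:", calls, "error:", err)
}
```

A loop like this makes one initial attempt plus at most maxRetries retries, which matches the retryCount < maxRetries condition used in checkLink.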

Final Thoughts

By cancelling the context, all goroutines listening on the context channel (ctx.Done()) are notified to stop their operations gracefully. We achieved concurrent execution by using multiple goroutines to check links concurrently, limited by a semaphore. Finally, we use a channel to communicate between goroutines for the pinging mechanism, while retries are handled inside checkLink itself.